Care Flow

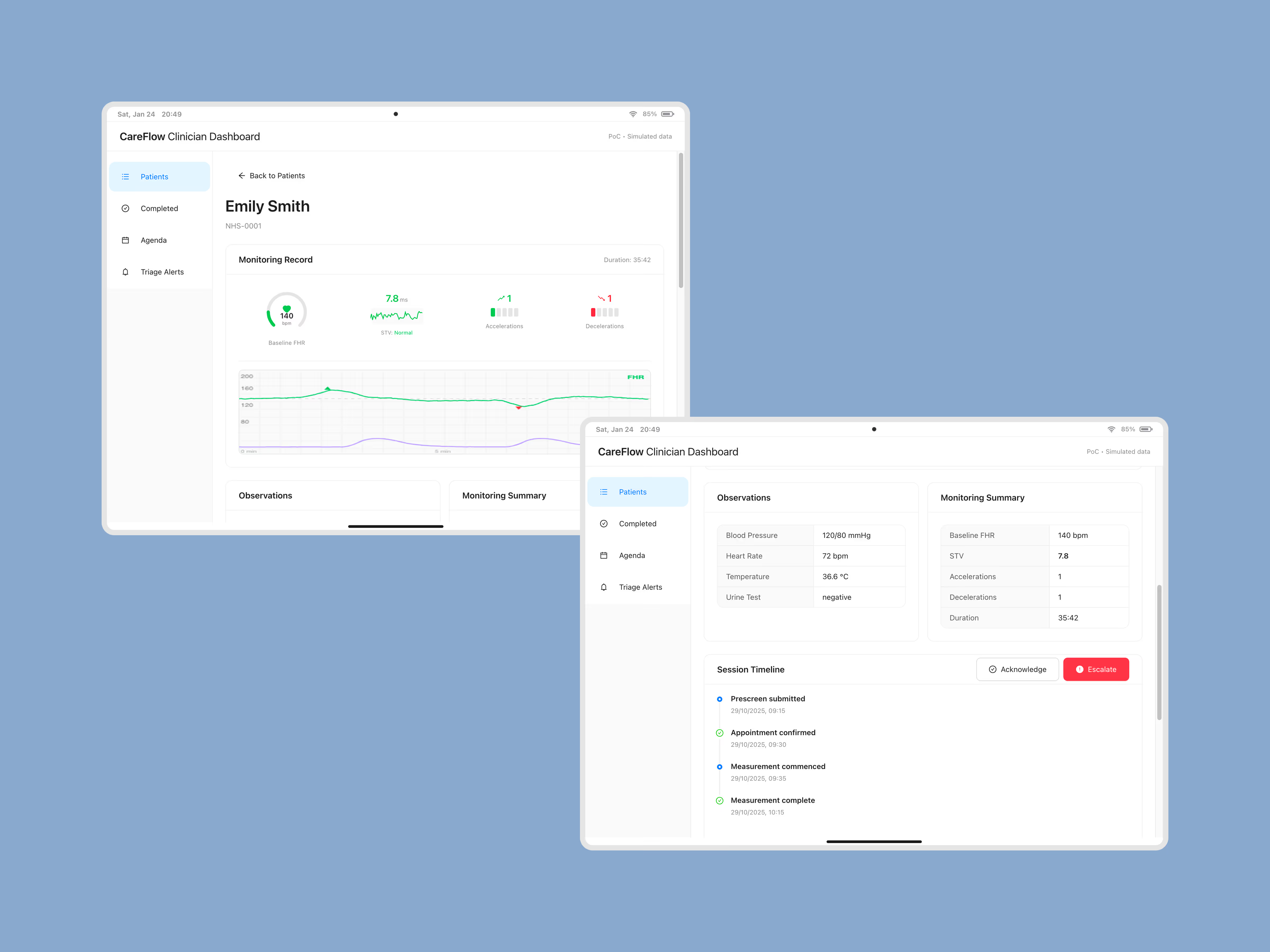

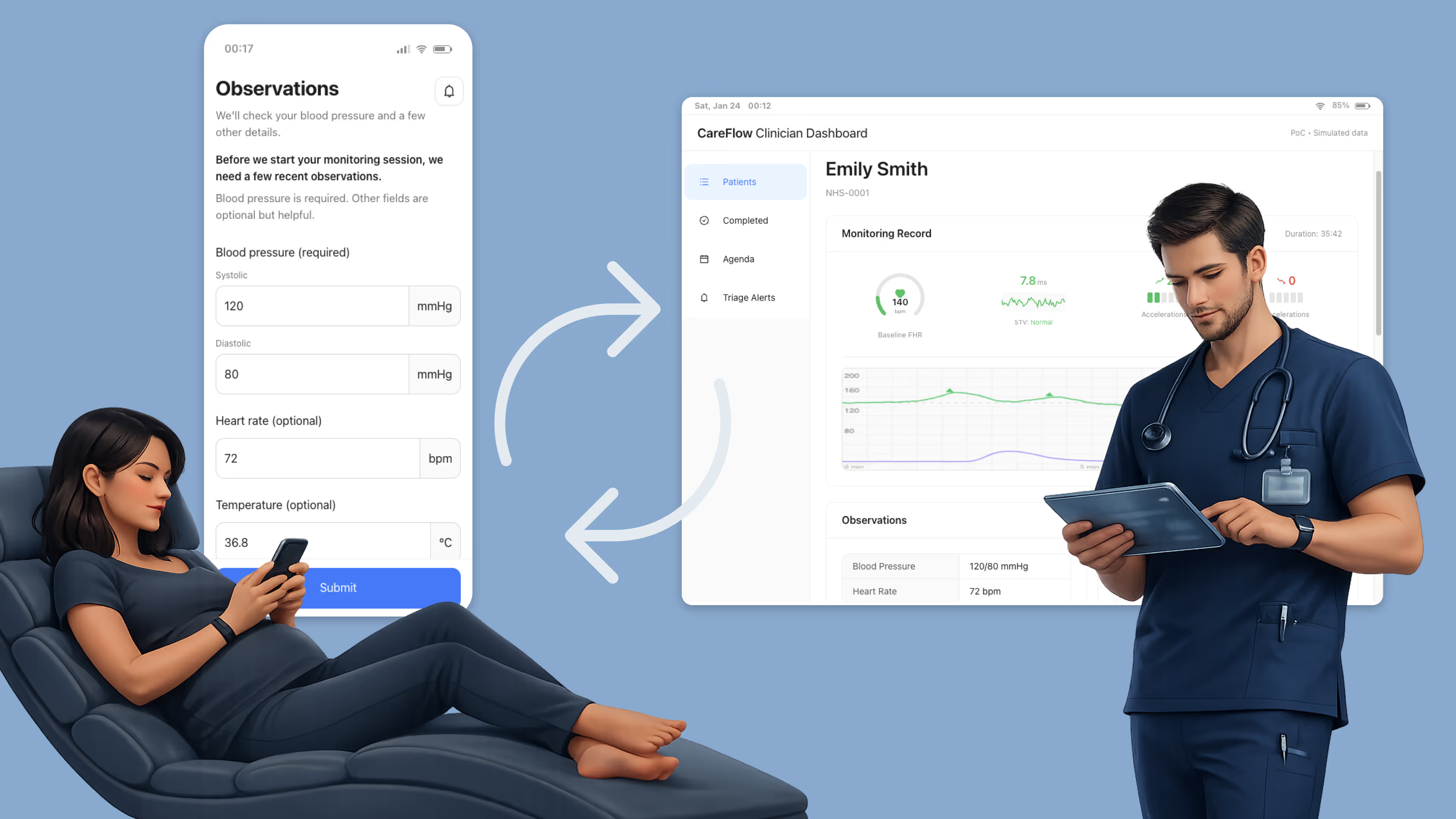

Built an end‑to‑end proof of concept to validate remote pregnancy monitoring — a safety‑first patient flow and a clinician dashboard for triage and review.

PoC snapshot

Timeframe

Nov 2025

↓

7 days of build

3 days of validation

Goal

Validate a safety-first remote pregnancy monitoring workflow with clinician oversight.

Deliverables

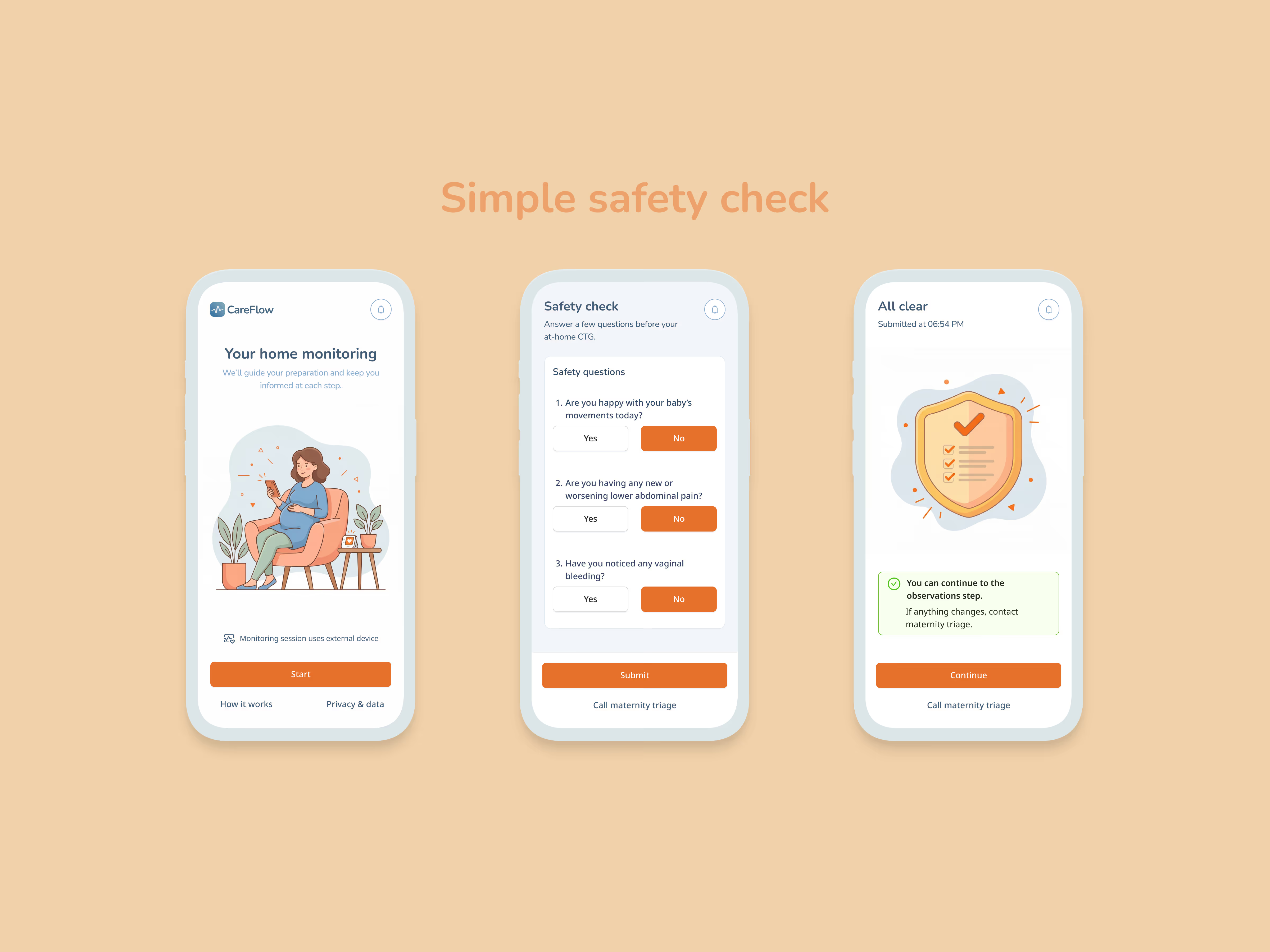

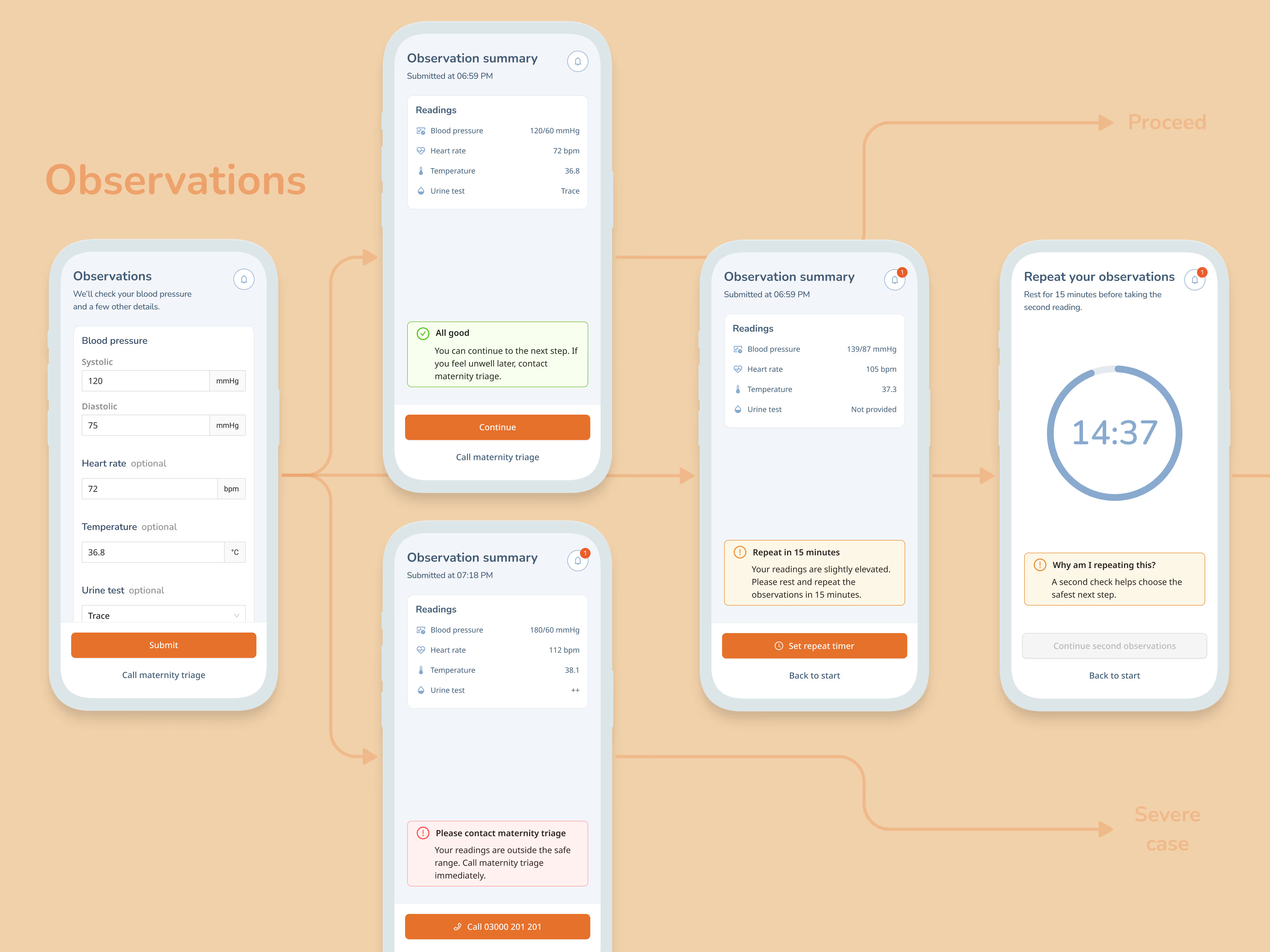

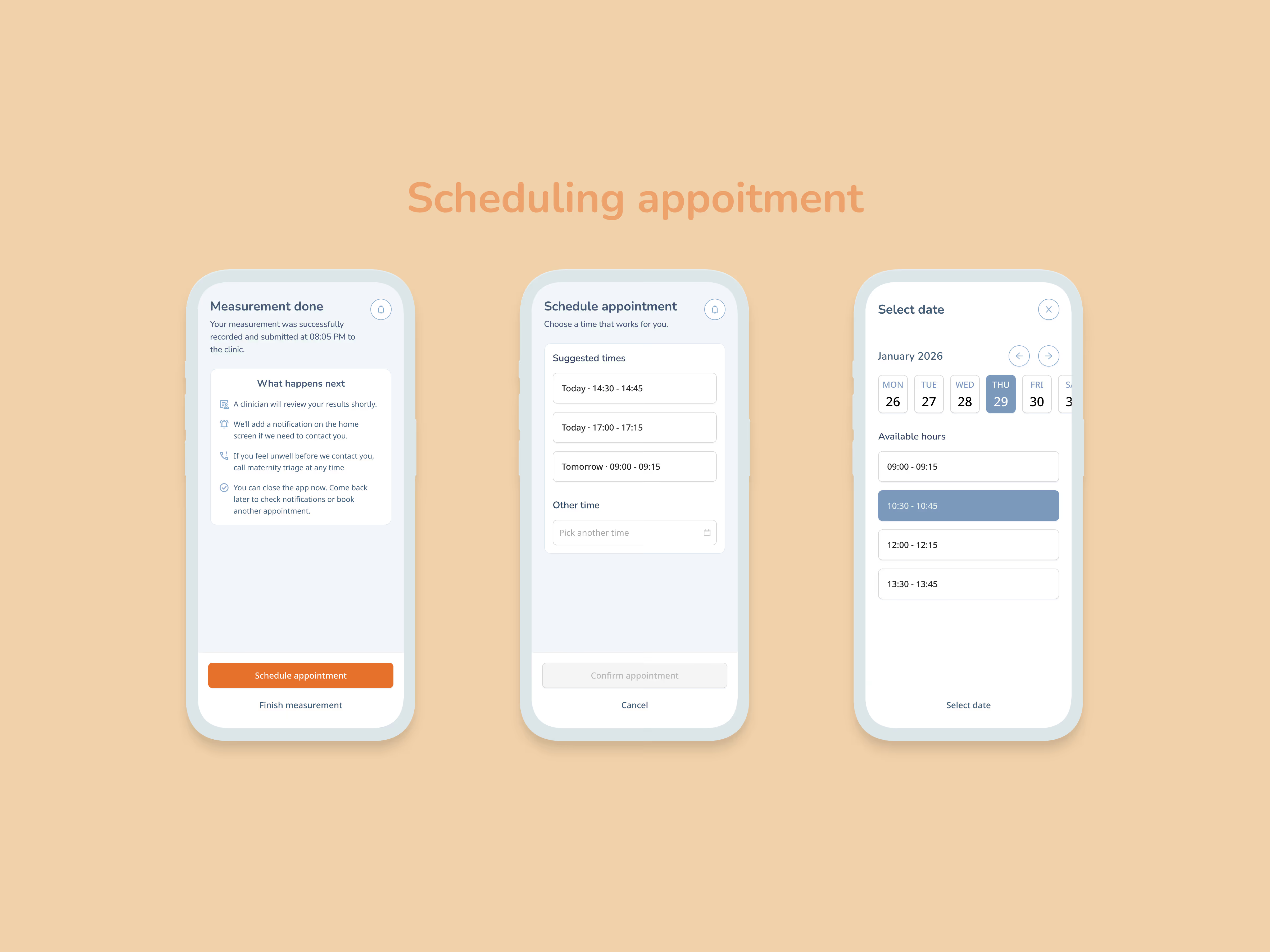

Patient flow → triage, observations, next steps. Not diagnostic.

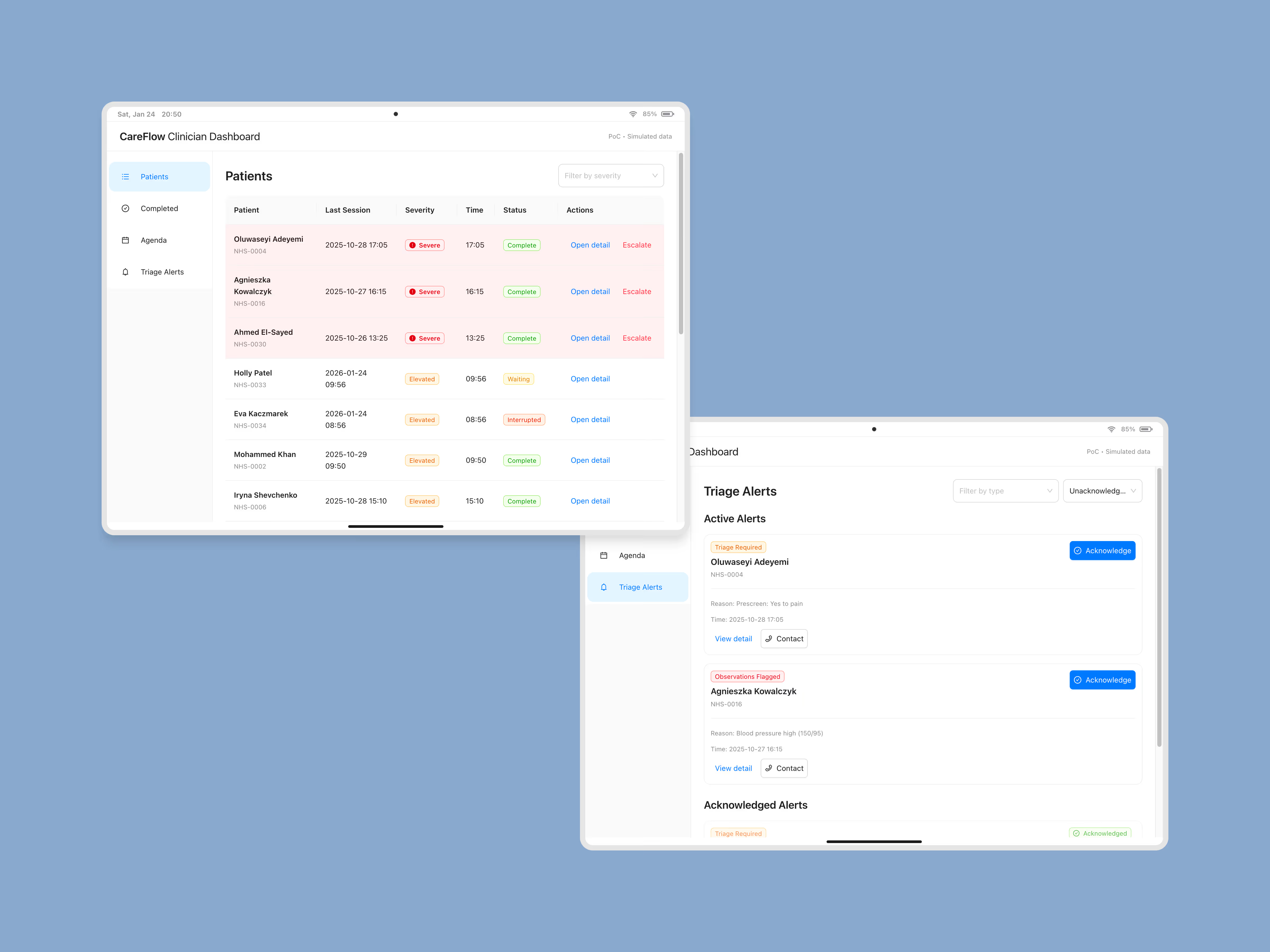

Clinician flow → dashboard, triage review, live monitoring, alerts, patient escalation

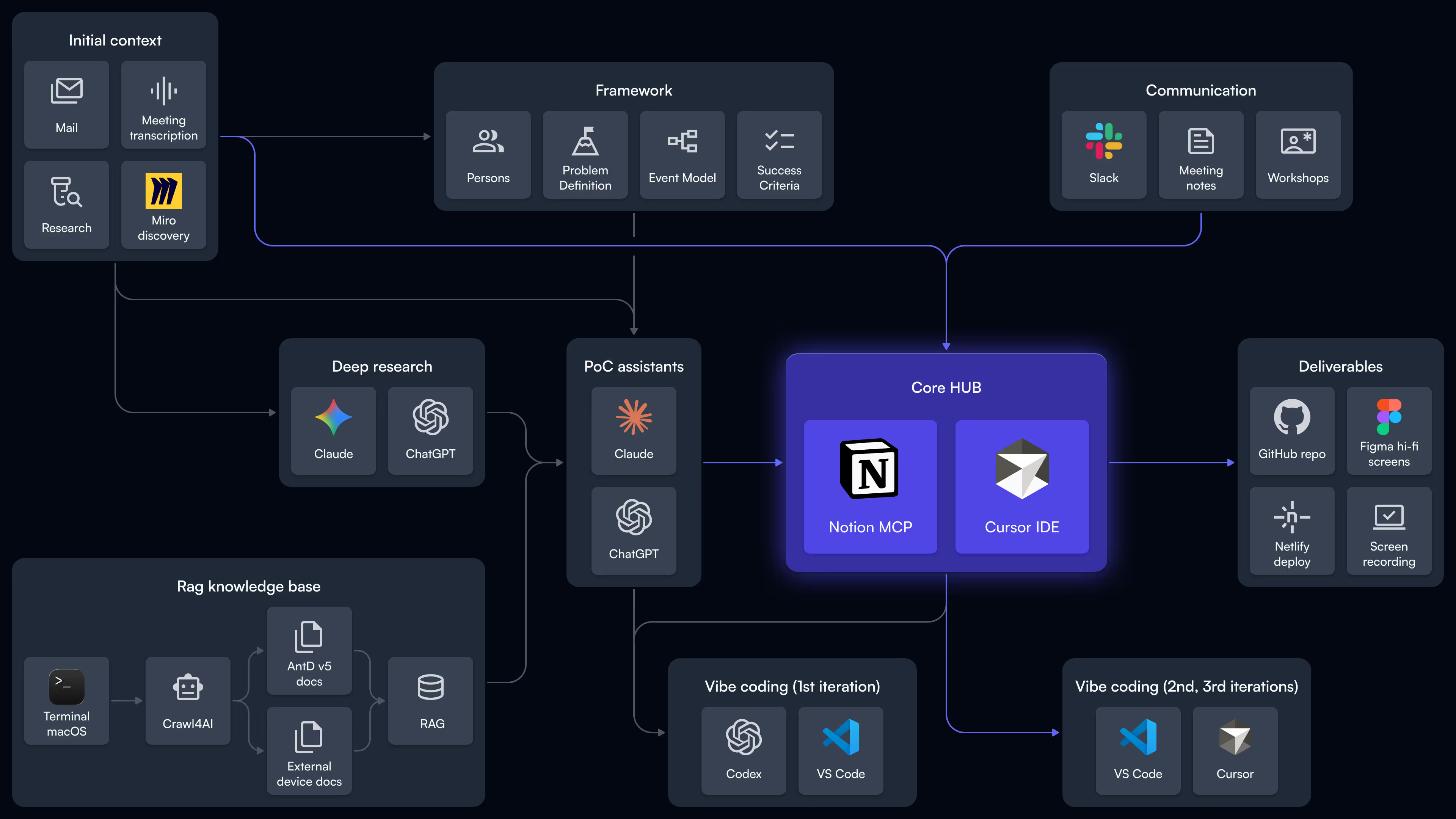

Toolchain

- Notion - SSOT

- AI synthesis - ideation, execution

- Cursor - implementation

- Figma - high fidelity screens

Proof pack

End‑to‑end flow across two personas

Notion as the PoC's knowledge base

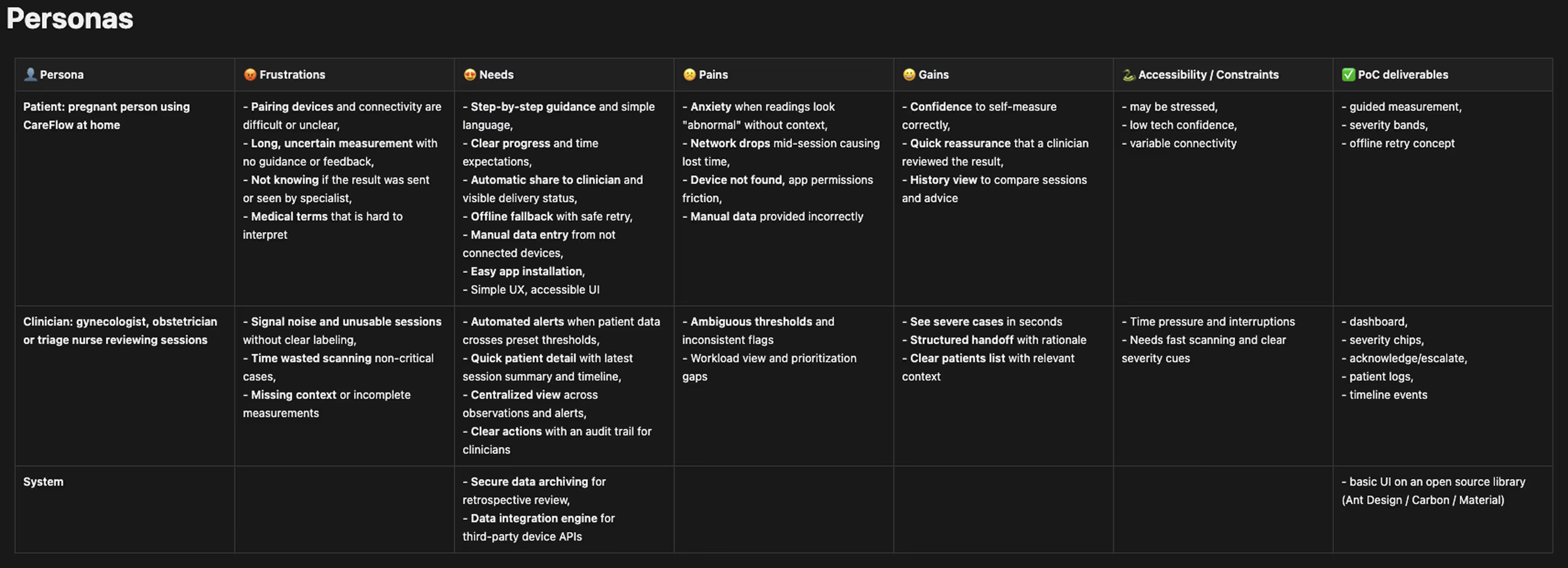

Personas

A structured deep-dive that turned both personas into standardized, implementation-grade context. It captured patient accessibility constraints and clinician system needs in a form that an LLM could reliably use.

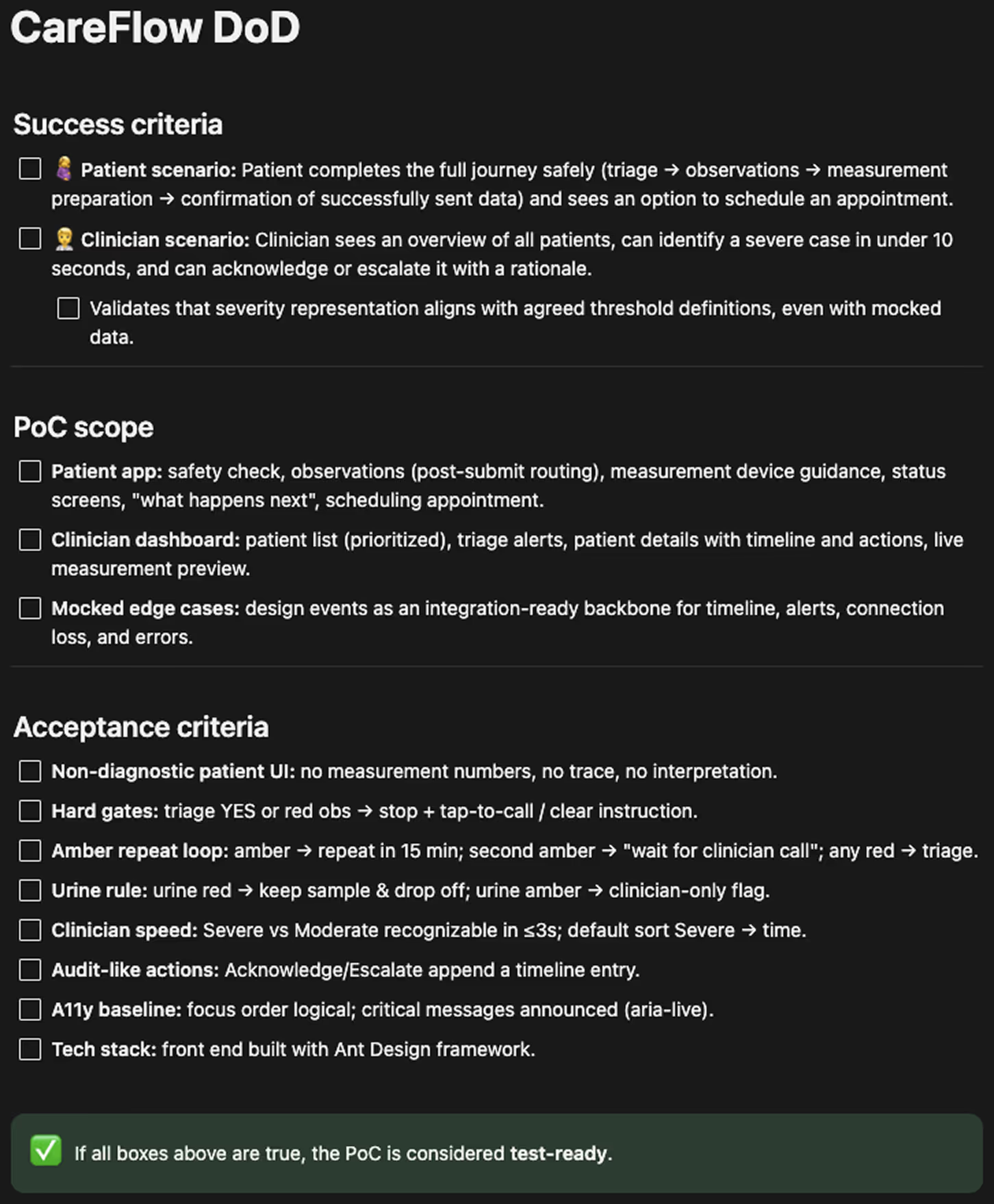

Definition of Done

A "test-ready" spec: success scenarios and acceptance criteria to prevent scope drift and measure quality.

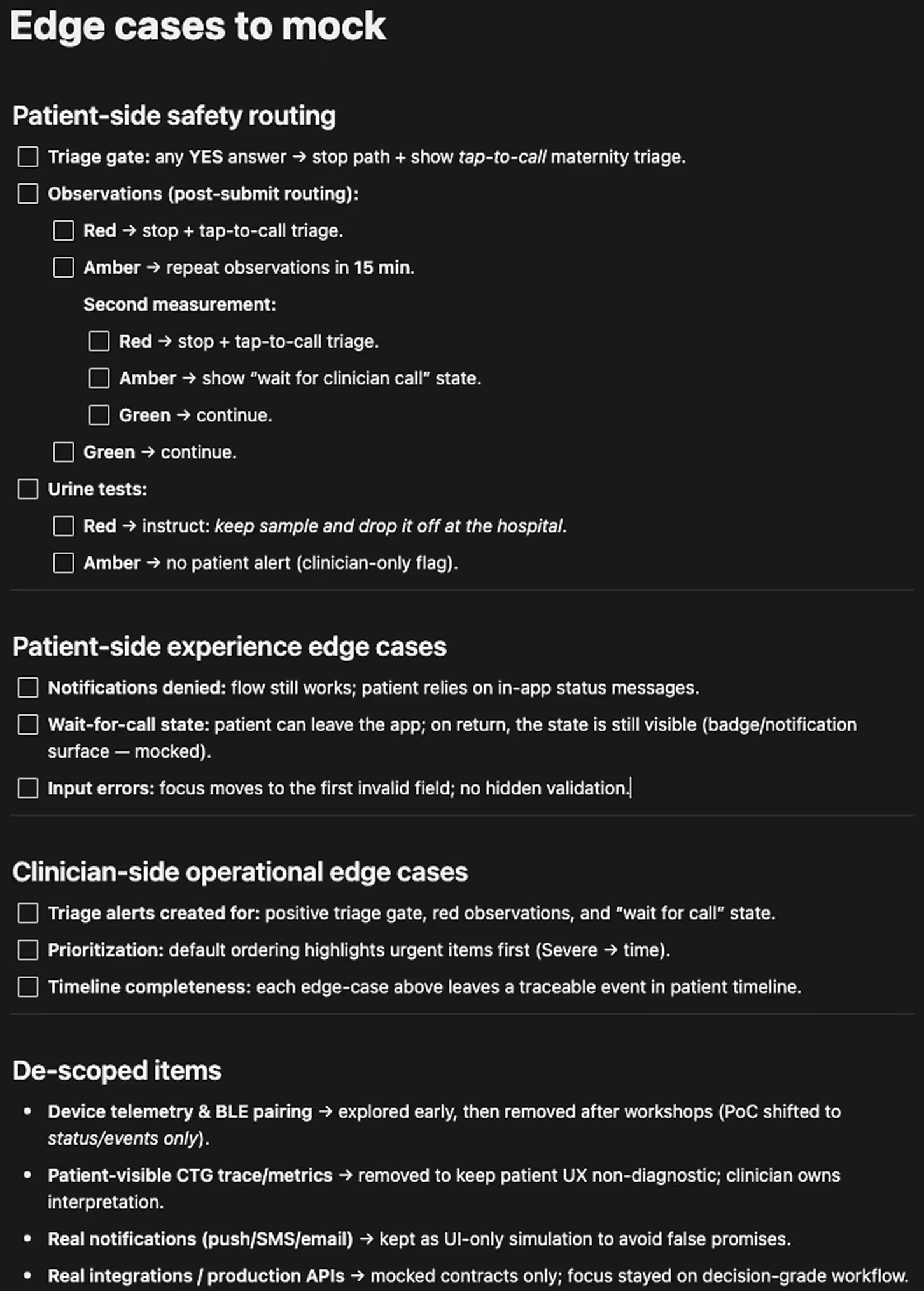

Edge-case rules

An AI-first approach required an explicit edge-case map to keep the demo scalable and to make it possible to exclude non-critical paths. I captured these rules in a "mock-ready" form for easy simulation and implementation.

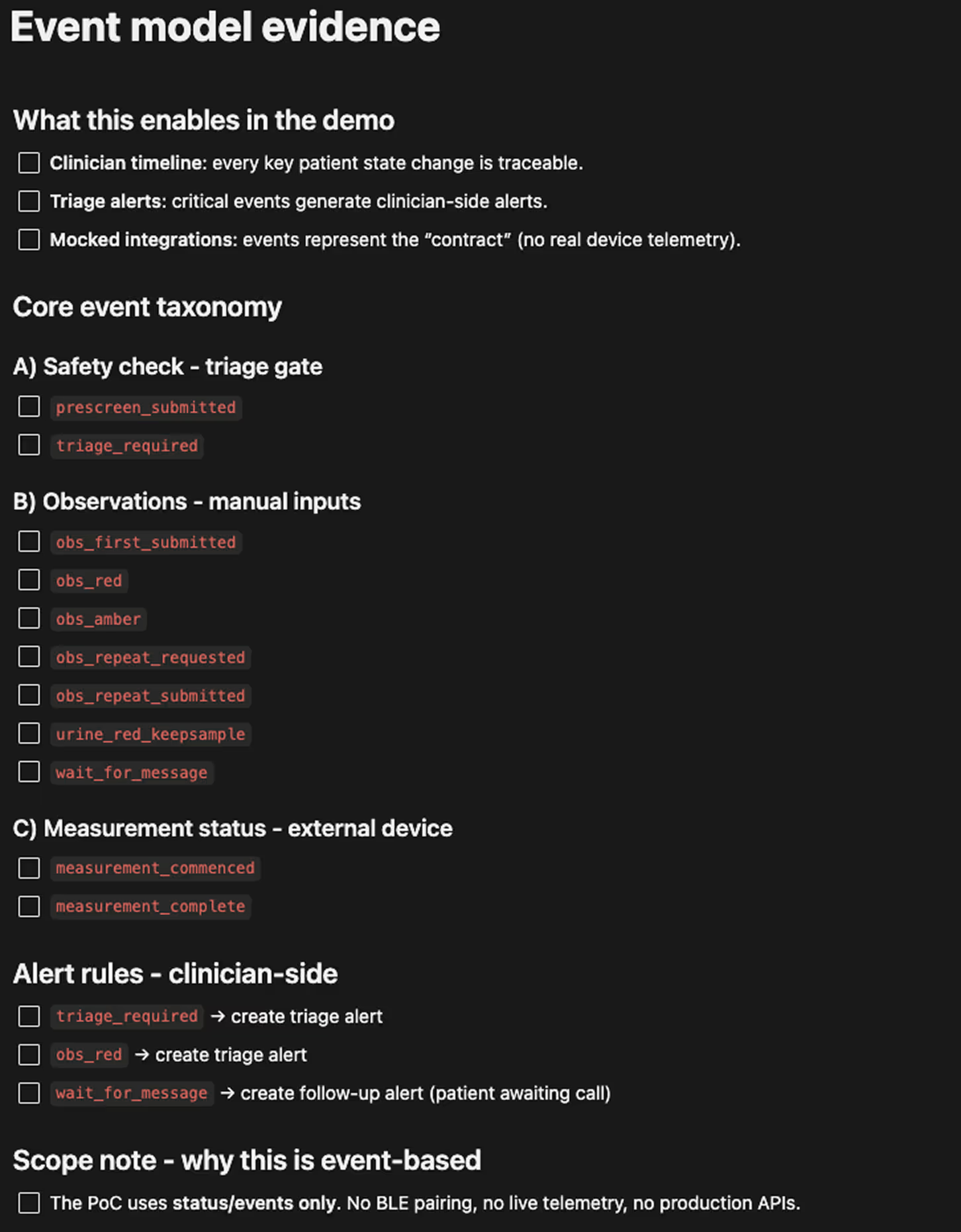

Event model evidence

The event model defined how patient actions propagate into clinician visibility, alerts, and next steps. It ensured valid flowcharts and routing rules, making the demo predictable and easy to control.
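As a rough illustration of that propagation, the sketch below folds patient events into a clinician-facing view. The event names, fields, and alert wording are hypothetical, chosen only for this example; the actual event model from the PoC is not reproduced here.

```typescript
// Hypothetical event shapes: the real PoC event names are not listed in this case study.
type PatientEvent =
  | { type: "SESSION_STARTED"; patientId: string }
  | { type: "OBSERVATION_SUBMITTED"; patientId: string; level: "green" | "amber" | "red" }
  | { type: "SESSION_STOPPED"; patientId: string; reason: string };

// What the clinician dashboard would derive from the event stream (assumed shape).
interface ClinicianView {
  queue: string[];  // patient IDs awaiting review
  alerts: string[]; // human-readable alert messages
}

const emptyView: ClinicianView = { queue: [], alerts: [] };

// Pure reducer: the same events always produce the same clinician view,
// which is what keeps a demo predictable and easy to replay.
function applyEvent(view: ClinicianView, event: PatientEvent): ClinicianView {
  switch (event.type) {
    case "SESSION_STARTED":
      // Starting a session alone does not surface anything to the clinician.
      return view;
    case "OBSERVATION_SUBMITTED": {
      const queue = view.queue.includes(event.patientId)
        ? view.queue
        : [...view.queue, event.patientId];
      const alerts =
        event.level === "red"
          ? [...view.alerts, `Red observation: ${event.patientId}`]
          : view.alerts;
      return { queue, alerts };
    }
    case "SESSION_STOPPED":
      return {
        ...view,
        alerts: [...view.alerts, `Session stopped (${event.reason}): ${event.patientId}`],
      };
  }
}

// Replay a mocked event log into the clinician view.
const mockedLog: PatientEvent[] = [
  { type: "SESSION_STARTED", patientId: "p1" },
  { type: "OBSERVATION_SUBMITTED", patientId: "p1", level: "red" },
];
const clinicianView = mockedLog.reduce(applyEvent, emptyView);
```

Keeping the mapping as a pure function is one way to get the predictability described above: replaying the same mocked log always yields the same queue and alerts.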

Implementation proof

A summary of the Cursor-built implementation, generated by Claude, showing what was actually shipped in code.

Stakes & why now

The goal was to build a decision-grade proof of concept under time pressure using AI tools—and intentionally skip lo-fi and hi-fi screens until the DoD was met.

Why it mattered

- The concept needed a credible, demo-ready proof to align stakeholders and eliminate gaps in shared understanding.

- The goal was to validate a remote pregnancy monitoring workflow with clinician oversight—one that was safe, testable, and operationally realistic.

- I had to compress uncertainty into a tight window and surface clear "go / no-go" signals early.

What was at risk

- Product risk: Accidentally creating a diagnostic patient experience that's unsafe or unclear in critical moments.

- Operational risk: A concept that fails under real triage constraints—queues, prioritization, alerts, time pressure.

- Engineering risk: AI tools that hallucinate, ignore scope, or generate code that's hard to change or scale.

Key constraints

- Patient experience: strictly non‑diagnostic (states + next steps only).

- PoC scope: end‑to‑end dual‑persona flow (patient → clinician), not isolated screens.

- Integrations: real device telemetry and BLE pairing intentionally excluded; the workflow was validated via mocked statuses and events.

- Test‑ready output: requirements, edge cases, event model.

What shaped the PoC

Decision 01

Maximize AI capabilities

The initial decision was to use an AI‑first approach to ship a decision‑grade PoC. I used multiple tools and AI assistants, iterating on prompt methods to generate context and turn messy inputs into structured specs.

The critical part was control—every output passed through quality gates before it could influence the build.

Decision 02

Build a scalable environment from the start

On the technical side, the crucial decision was to build a PoC that stays easy to understand and evolve under pressure. I treated maintainability as a first-class requirement—the project needed to tolerate pivots without collapsing into inconsistencies.

I used common practices to make the environment explicit: a clear data schema, stable DOM and component structure, predictable routing, and flowcharts in Mermaid to get a visual overview.

Decision 03

Use the first demo as the truth serum

After the first iteration, the points and cases initially logged as knowledge gaps and assumptions were clarified, and the resulting changes were delivered in the second iteration.

I treated iteration 1 as a fast synthesis of the initial medical overview. The first clickable demo was designed to trigger high-signal correction, not polite feedback.

Decision 04

Use open-source solutions

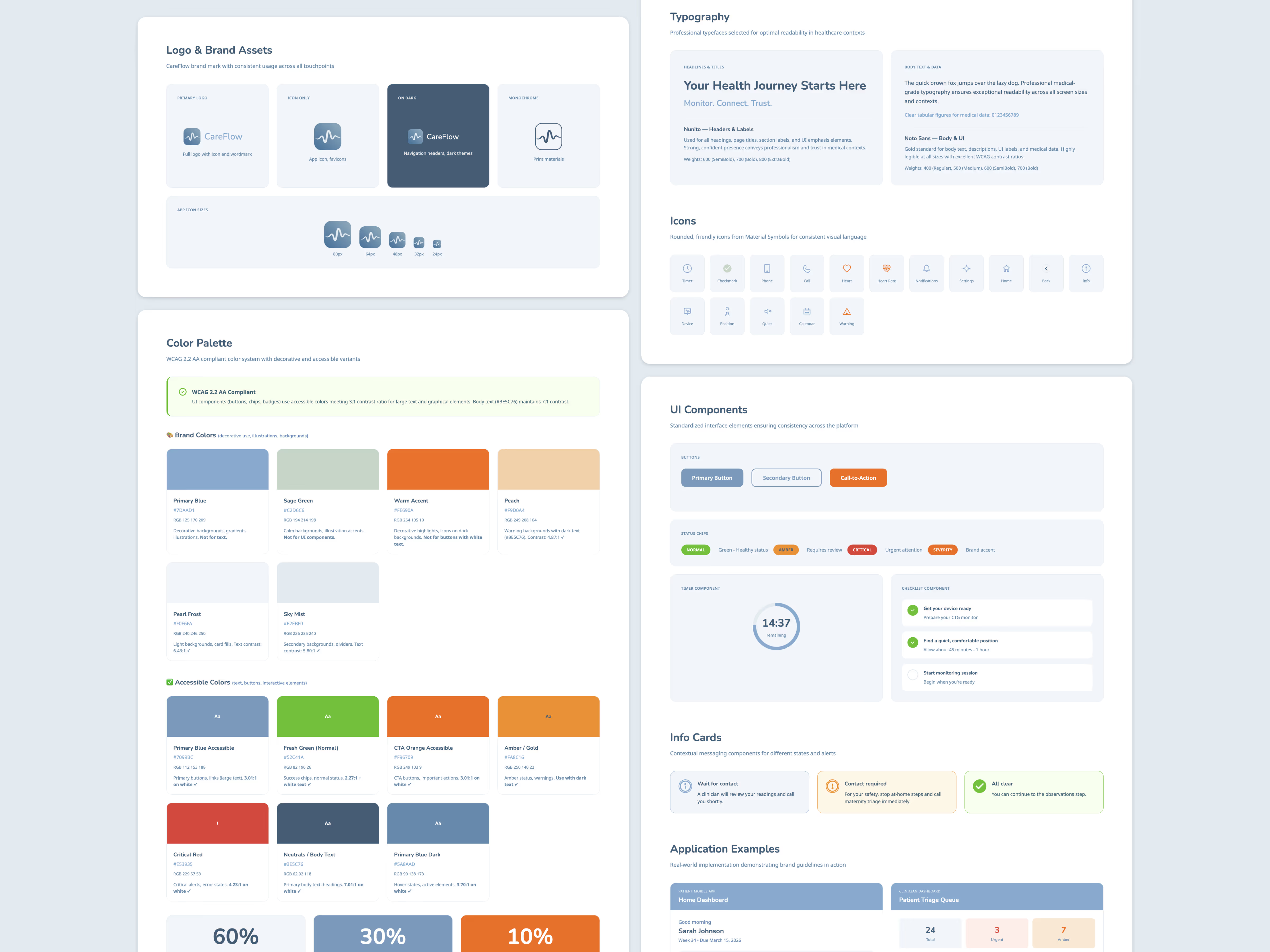

From a legal perspective, I wanted to rely on open-source resources. While not critical for the PoC itself, this decision could set the right course for building an MVP—such as proposing a specific UI framework or design system.

Decision 05

Build a testable, resettable demo environment

I treated the PoC as a controlled simulation, not a one-off prototype. The goal was to make every demo repeatable, debug‑friendly, and safe to iterate under time pressure.

- I used a resettable demo harness to keep sessions clean and comparable between stakeholders.

- I introduced explicit A/B and failure-mode toggles to simulate key scenarios without building real integrations.
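A minimal sketch of what such a harness could look like is shown below. The toggle names, the state shape, and the `DemoHarness` class are assumptions made for illustration; the case study does not document the actual harness API.

```typescript
// Hypothetical toggles: the source only states that A/B and failure-mode toggles existed.
interface DemoToggles {
  variant: "A" | "B";
  simulateDeviceDisconnected: boolean; // mocked status, no real device involved
  simulateSubmitFailure: boolean;
}

interface DemoState {
  toggles: DemoToggles;
  events: string[]; // mocked events recorded during a session
}

const DEFAULT_TOGGLES: DemoToggles = {
  variant: "A",
  simulateDeviceDisconnected: false,
  simulateSubmitFailure: false,
};

// Always build a fresh object so a reset never shares state with an earlier session.
function createInitialState(): DemoState {
  return { toggles: { ...DEFAULT_TOGGLES }, events: [] };
}

class DemoHarness {
  private state: DemoState = createInitialState();

  setToggle<K extends keyof DemoToggles>(key: K, value: DemoToggles[K]): void {
    this.state.toggles[key] = value;
  }

  record(event: string): void {
    this.state.events.push(event);
  }

  // Return a copy so callers cannot mutate the harness state by accident.
  snapshot(): DemoState {
    return { toggles: { ...this.state.toggles }, events: [...this.state.events] };
  }

  // Return the demo to its known starting point between stakeholder sessions.
  reset(): void {
    this.state = createInitialState();
  }
}

const harness = new DemoHarness();
harness.setToggle("simulateSubmitFailure", true);
harness.record("OBSERVATION_SUBMITTED");
harness.reset(); // the next session starts from the defaults again
```

Resetting by rebuilding the initial state, rather than undoing individual changes, is what makes sessions comparable: every stakeholder starts from the same defaults.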

The problem we were solving

A research and clinical team at a renowned UK university needed a decision‑grade proof of concept for remote pregnancy monitoring that could be tested and discussed with real clinicians, not just described in slides.

The target users were:

- pregnant patients completing structured at‑home sessions supported by an existing measurement device

- clinicians responsible for reviewing sessions, triaging risk, and deciding what happens next

The UK context mattered. Many people, especially immigrants, face barriers to consistent antenatal care, while the NHS is already under pressure. The team needed a concept that could reduce avoidable workload without creating unsafe expectations.

What had to be proven

- The patient experience must stay strictly non‑diagnostic, focusing on state and next steps, not interpretation.

- The workflow must be operationally realistic for clinicians, including review, prioritization, and accountable actions.

- The system must be testable end‑to‑end, so stakeholders can align on what to build next based on a real interaction, not assumptions.

My role

I owned the PoC end‑to‑end, from clinical context to demo‑ready prototype and artifacts.

- Set up a single source of truth in Notion for inputs, constraints, and acceptance criteria.

- Translated clinical context into explicit guardrails, edge cases, and an event model.

- Designed the PoC as a repeatable, testable demo environment.

- Shipped a demo‑ready interactive prototype using AI‑assisted implementation.

- Delivered hi‑fi Figma screens for communication outside the live demo.

PoC deliverables

Interactive demo

I delivered the PoC as a runnable, dual‑persona interactive demo that covered the full patient → clinician handoff. It was built to support both a fast walkthrough and deliberate safety and edge scenarios, without relying on real integrations.

To make stakeholder review frictionless, the demo was also published as a hosted build so the client could click through it asynchronously without local setup.

Screen recordings

A screen recording was produced to keep the PoC reviewable even when live access was inconvenient or unreliable. It served as a stable, async-friendly artifact for stakeholder feedback and internal alignment.

Implementation repo and reproducible build

The PoC was implemented as a real front-end codebase with explicit demo scaffolding, including a resettable harness, deterministic routing, and mock-driven states and failure-mode toggles. This made the output reproducible in stakeholder sessions and resilient to iteration under time pressure.

High‑fidelity design

I created a minimal PoC branding layer first, including lightweight identity elements, a color palette, and short styling guidelines to avoid a raw component-library look in demos.

Based on that system, I produced high‑fidelity screens as the final presentation layer to communicate key UX moments clearly outside the live demo, while staying consistent with the interaction model shipped in code.

Validation loop

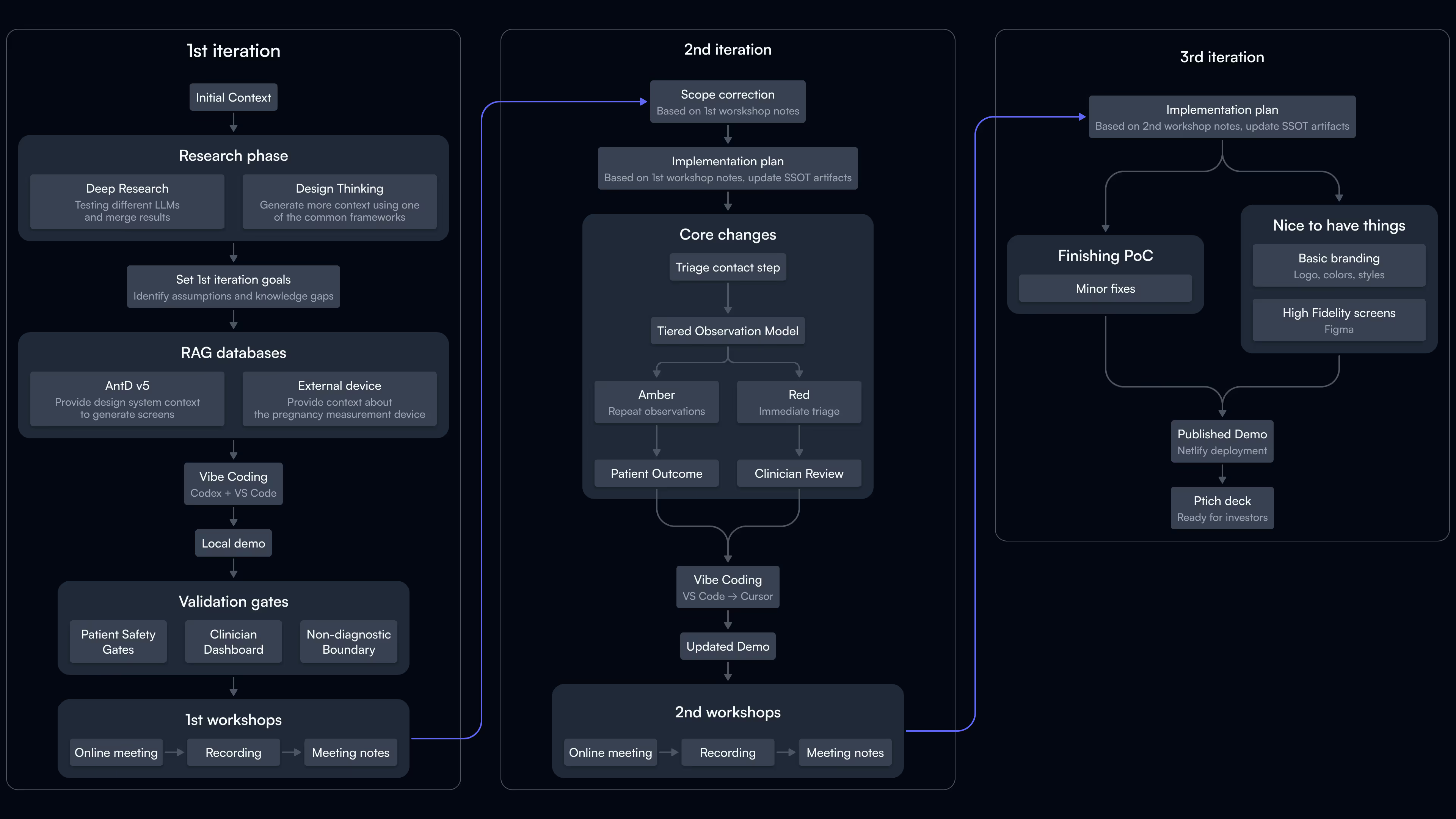

Iteration 01

Ship a simple demo to fill knowledge gaps and clarify assumptions

The first demo validated three fundamentals:

- patient-side safety gates and stop conditions

- the non-diagnostic boundary in patient messaging

- the clinician dashboard as the decision surface for triage and next steps

I presented the local environment in online workshops with the client.

Iteration 02

Translate workshop feedback into clearer patient states and stronger clinician review

The workshop turned gaps into concrete changes:

- Added a clear triage contact step on patient stop screens to reduce friction in high-risk moments.

- Replaced a single warning outcome with a tiered observation model:

  - Amber triggers a repeat measurement after 15 minutes.

  - Red triggers an immediate triage outcome.

- Introduced an explicit patient outcome for post-submission follow-up, making it clear that a clinician may contact them.

- Expanded clinician-side review with completed examinations and patient context so triage decisions remain visible and accountable.

This iteration also clarified the interaction model: routing happens after a deliberate submit and recommendation step, not during typing.
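The tiered model and the submit-then-route rule can be sketched as a single function that runs only on submit. The type names, the "green" level, and the message wording are assumptions for illustration; only the amber (repeat after 15 minutes) and red (immediate triage) rules come from the workshop outcome described above.

```typescript
// "green" is an assumed label for observations that need no immediate action.
type ObservationLevel = "green" | "amber" | "red";

type PatientOutcome =
  | { kind: "follow_up"; message: string }
  | { kind: "repeat_measurement"; afterMinutes: number; message: string }
  | { kind: "triage"; message: string };

const REPEAT_DELAY_MINUTES = 15; // from the tiered observation model

// Called once, after the patient deliberately submits; never while they are typing.
// Messages describe state and next steps only, with no interpretation of readings.
function routeAfterSubmit(level: ObservationLevel): PatientOutcome {
  switch (level) {
    case "red":
      return { kind: "triage", message: "Please contact triage now." };
    case "amber":
      return {
        kind: "repeat_measurement",
        afterMinutes: REPEAT_DELAY_MINUTES,
        message: `Please repeat the measurement in ${REPEAT_DELAY_MINUTES} minutes.`,
      };
    case "green":
      return {
        kind: "follow_up",
        message: "Your session was submitted. A clinician may contact you.",
      };
  }
}

// Usage: a hypothetical submit handler would call the router exactly once per submission.
const outcome = routeAfterSubmit("amber");
```

Routing on submit rather than on input changes keeps the patient from seeing an outcome flip while they are still entering values.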

Iteration 03

Address minor findings, publish the demo, and handle nice-to-have features

Once the core clinical rules were agreed, the focus shifted to change control.

- Converted decisions into an explicit Iteration 3 plan covering screen updates, routing rules, and event-model changes.

- Kept stakeholder sessions reproducible with a resettable demo harness, stable routing, and mock-driven scenario toggles.

- Designed high-fidelity screens for a pitch deck that can be shared with potential investors or included in funding applications.

Key learnings

- Ship a testable end‑to‑end demo early to force precise corrections instead of expanding discovery.

- AI‑assisted workflows need quality gates and change control. The win was making decisions explicit and updating routing, criteria, and event logic together.

- Optimal stack: two‑assistant pipeline—one for the Notion knowledge base, one in a generative IDE with MCP access.

- RAG proved most useful for device and domain documentation. It added little for UI library docs once the IDE assistant could follow library constraints on its own.

- Standardizing the process turned future PoCs into a repeatable system, not one‑off sprints.
